Data Analysis

Andrey Shestakov (avshestakov@hse.ru)

Neural Networks 2¹

¹ Some materials are taken from the machine learning course by Victor Kitov

Convolutional Neural Networks

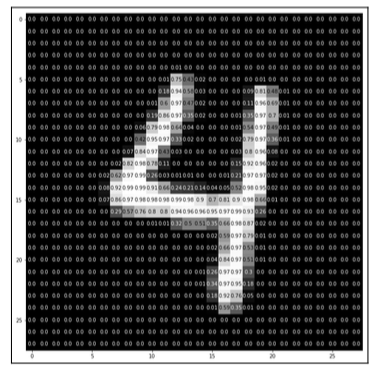

What is an image?

- Multi-dimensional array

- Key characteristics:

- width

- height

- depth

- range

- Example

- RGB images have depth of 3 (one for each of R, G, B)

- Usually the color intensity range is from 0 to 255 (8 bits per channel)
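Here is a minimal sketch, assuming NumPy and a hypothetical randomly generated image, of how an RGB image is stored as a (height, width, depth) array of 8-bit intensities:

```python
import numpy as np

# Hypothetical 4 x 6 RGB image: (height, width, depth), 8-bit intensities
height, width, depth = 4, 6, 3
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(height, width, depth), dtype=np.uint8)

print(image.shape)  # (4, 6, 3)
print(image.dtype)  # uint8

# Each channel (here R) is a 2-D array of size height x width
red = image[:, :, 0]
print(red.shape)    # (4, 6)

# Intensities stay in the 8-bit range [0, 255]
print(int(image.min()) >= 0 and int(image.max()) <= 255)  # True
```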

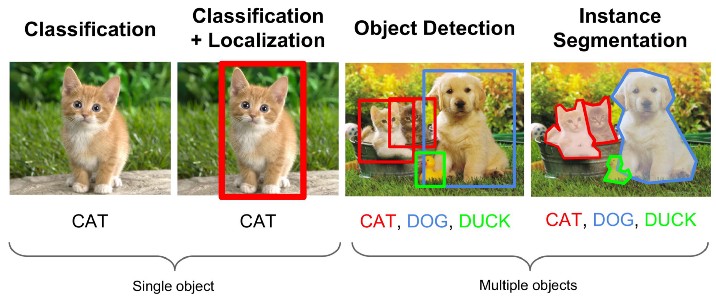

Common Tasks

Computer Vision (CV)

- Many computer vision techniques existed before the CNN hype, and many are still in use

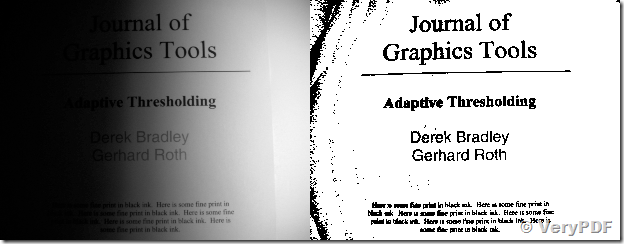

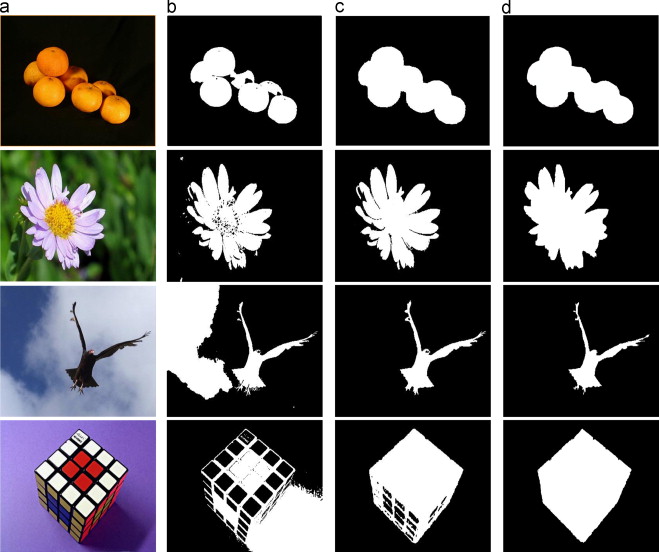

Threshold segmentation

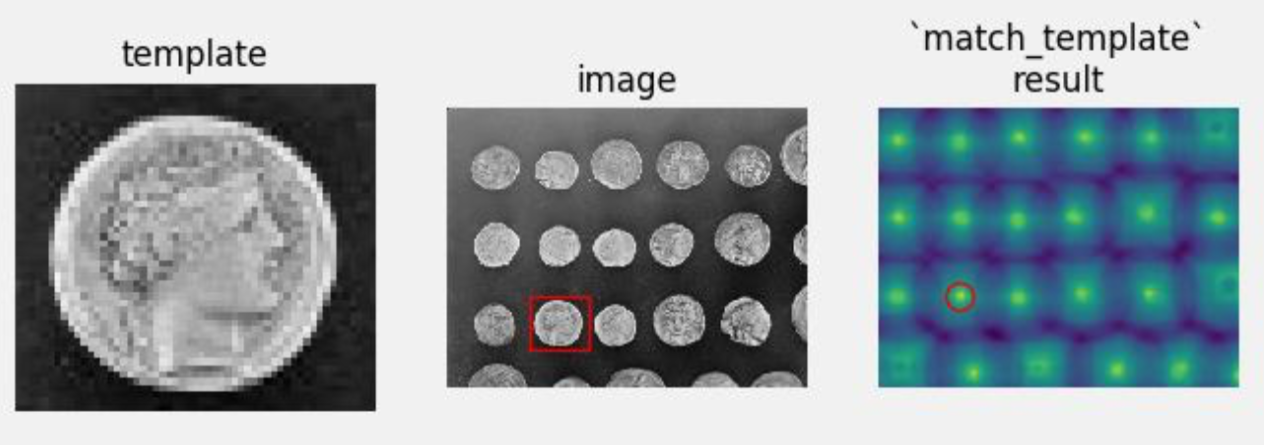
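In its simplest form, threshold segmentation labels every pixel brighter than a threshold as foreground. The sketch below assumes NumPy, a hypothetical grayscale image, and a hand-picked global threshold of 128. Both the image and the threshold are arbitrary choices made for illustration.

```python
import numpy as np

def threshold_segment(gray: np.ndarray, t: int) -> np.ndarray:
    """Return a binary mask: True where intensity > t (foreground)."""
    return gray > t

# Hypothetical 3 x 4 grayscale image: bright object on a dark background
gray = np.array([[ 10,  20, 200, 210],
                 [ 15, 220, 230,  25],
                 [  5,  12,  18, 240]], dtype=np.uint8)

mask = threshold_segment(gray, t=128)
print(mask.astype(int))
# [[0 0 1 1]
#  [0 1 1 0]
#  [0 0 0 1]]
```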

Template Matching

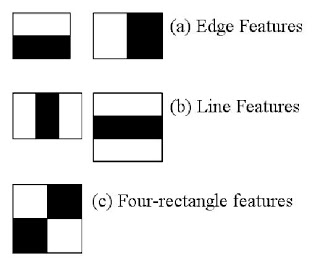

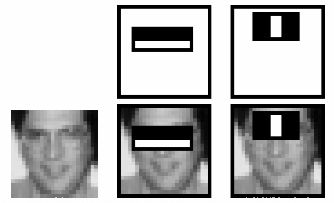

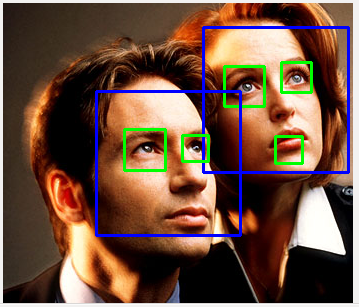
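Template matching slides a small template over the image and scores how well each window matches it. The slides do not say which similarity measure is used, so this sketch assumes the sum of squared differences (SSD), where a lower score means a better match. The function name `match_template_ssd` and the example data are hypothetical.

```python
import numpy as np

def match_template_ssd(image, template):
    """Slide `template` over `image`; return the SSD score map
    (lower = better match) and the (row, col) of the best match."""
    image = np.asarray(image, dtype=float)       # avoid uint8 overflow
    template = np.asarray(template, dtype=float)
    H, W = image.shape
    h, w = template.shape
    scores = np.empty((H - h + 1, W - w + 1))
    for i in range(H - h + 1):
        for j in range(W - w + 1):
            patch = image[i:i + h, j:j + w]
            scores[i, j] = np.sum((patch - template) ** 2)
    r, c = np.unravel_index(np.argmin(scores), scores.shape)
    return scores, (int(r), int(c))

# Hypothetical example: hide a 2 x 2 template at position (2, 3)
template = np.array([[1, 2],
                     [3, 4]])
image = np.zeros((5, 6))
image[2:4, 3:5] = template

scores, best = match_template_ssd(image, template)
print(best)  # (2, 3)
```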

Object Detection with Viola-Jones and Haar Features

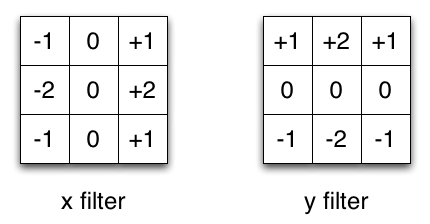

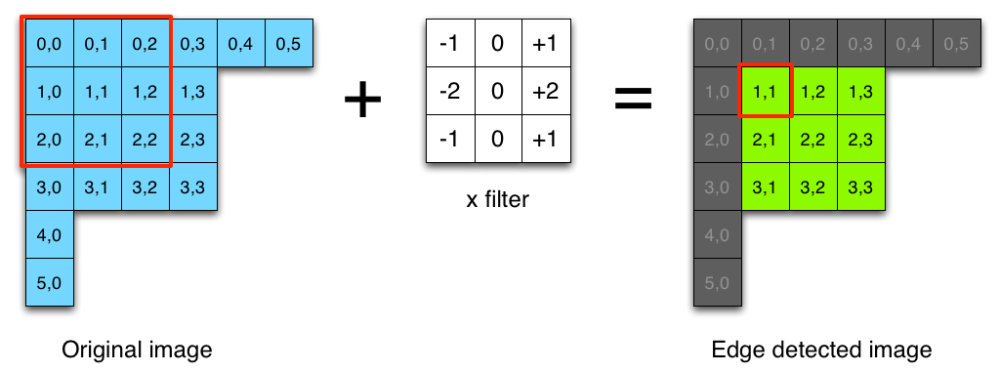

It is actually a convolution filter!
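Viola-Jones evaluates Haar-like features quickly using an integral image. The claim above can be checked with a small sketch. It assumes NumPy, a hypothetical random image, and a hypothetical two-rectangle "left minus right" feature. The feature value obtained from the integral image equals the window multiplied elementwise by a +1/-1 kernel and summed. That is exactly one output of a convolution (strictly, a cross-correlation, which is what CNN layers compute).

```python
import numpy as np

def integral_image(img):
    # ii[r, c] = sum of img[:r, :c]; padded with a leading zero row/column
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, r, c, h, w):
    # Sum of img[r:r+h, c:c+w] using four lookups
    return ii[r + h, c + w] - ii[r, c + w] - ii[r + h, c] + ii[r, c]

def haar_two_rect(ii, r, c, h, w):
    # Hypothetical edge feature: left h x w block minus the right h x w block
    return rect_sum(ii, r, c, h, w) - rect_sum(ii, r, c + w, h, w)

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(8, 8)).astype(float)

h, w = 4, 2
kernel = np.hstack([np.ones((h, w)), -np.ones((h, w))])  # +1 | -1 filter

ii = integral_image(img)
r, c = 2, 3
via_integral = haar_two_rect(ii, r, c, h, w)
via_kernel = np.sum(img[r:r + h, c:c + 2 * w] * kernel)  # one correlation step
print(np.isclose(via_integral, via_kernel))  # True
```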

Result

Conv Nets!

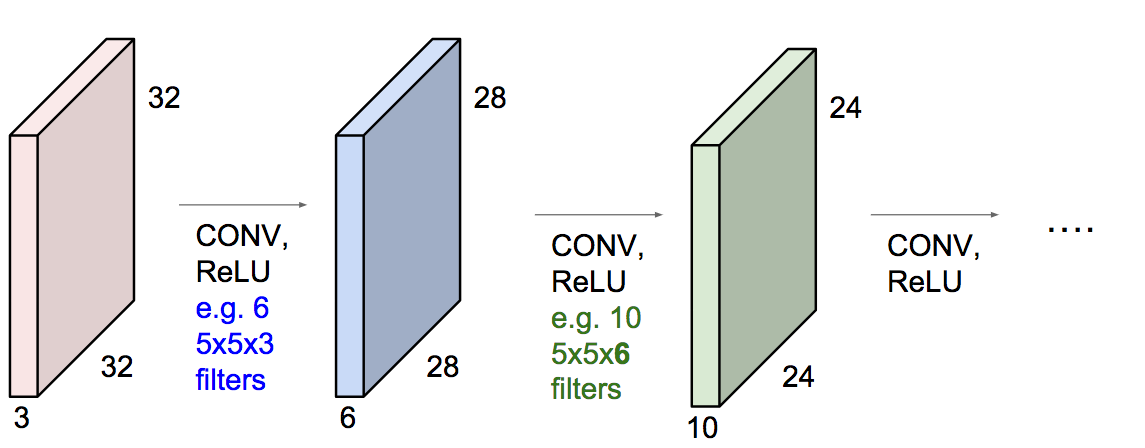

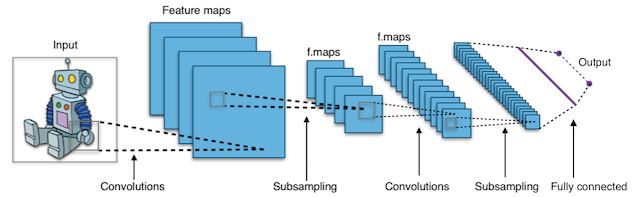

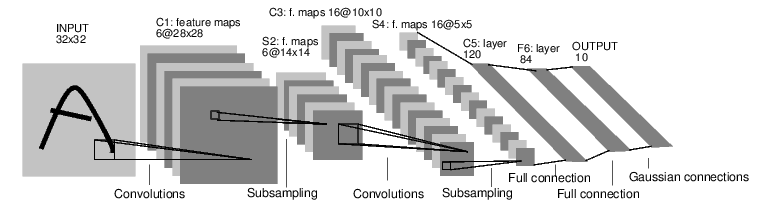

Typical CNN Architecture

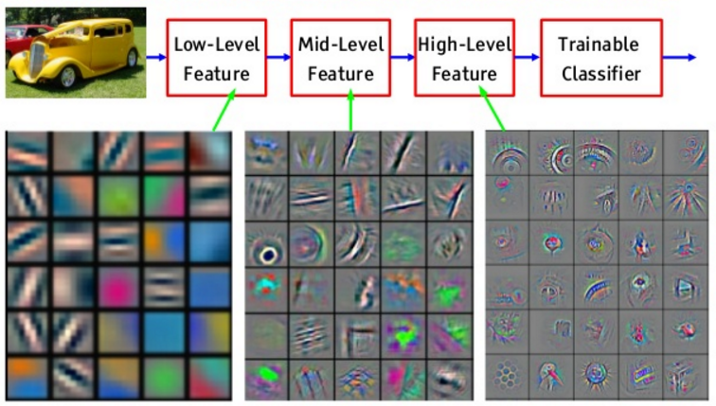

Features

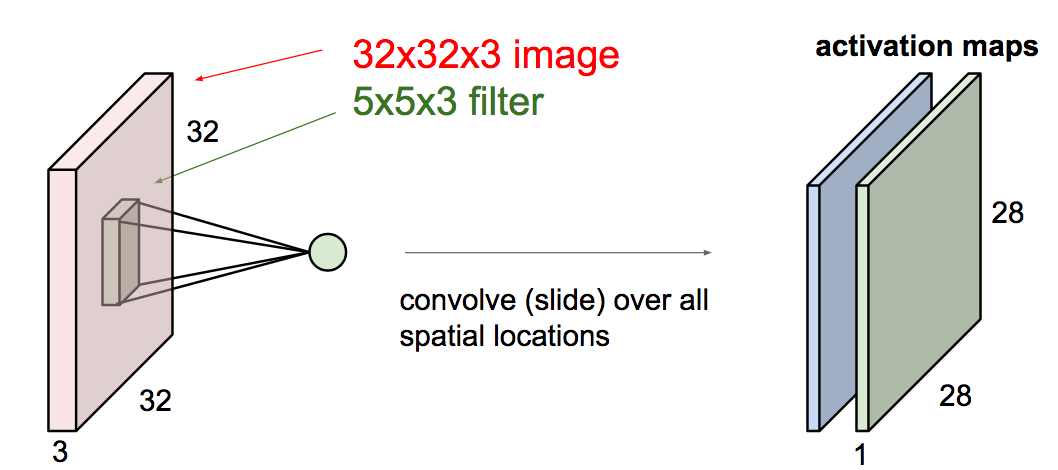

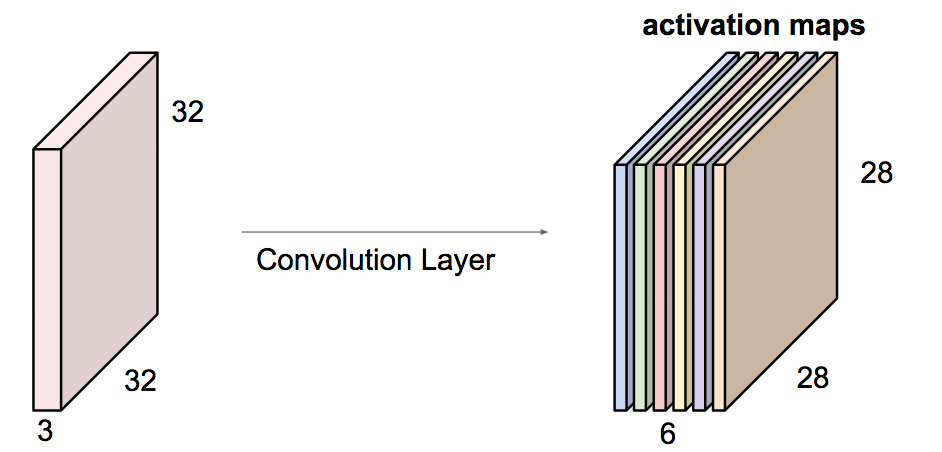

Key Specs of Conv Filters

- Number of filters

- Filter Size ($K$)

- Padding Size ($P$)

- Stride Size ($S$)

If the input size along one spatial dimension is $N_{in}$, how do we calculate the output size $N_{out}$?

$$N_{out} = \left\lfloor \frac{N_{in} + 2P - K}{S} \right\rfloor + 1$$
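When $N_{in} + 2P - K$ is not divisible by $S$, the fraction is rounded down. This is the usual convention, and PyTorch's `Conv2d` output-shape formula follows it too. Below is a small helper with hypothetical example values; `conv_output_size` is an illustrative name.

```python
def conv_output_size(n_in: int, k: int, p: int, s: int) -> int:
    """Output size along one spatial dimension (floor convention)."""
    return (n_in + 2 * p - k) // s + 1

print(conv_output_size(32, 5, 0, 1))  # 28
print(conv_output_size(32, 5, 1, 2))  # 15  (29 / 2 = 14.5 is rounded down)
print(conv_output_size(32, 3, 1, 1))  # 32  (K = 3, P = 1, S = 1 keeps the size)
```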

Number of parameters to learn?

- Input: $32 \times 32 \times 3$

- $20$ Filters: $5 \times 5$, $P = 1$, $S = 2$

- Number of weights?

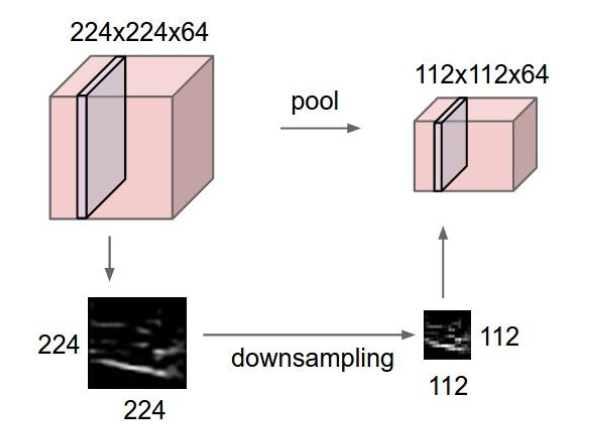

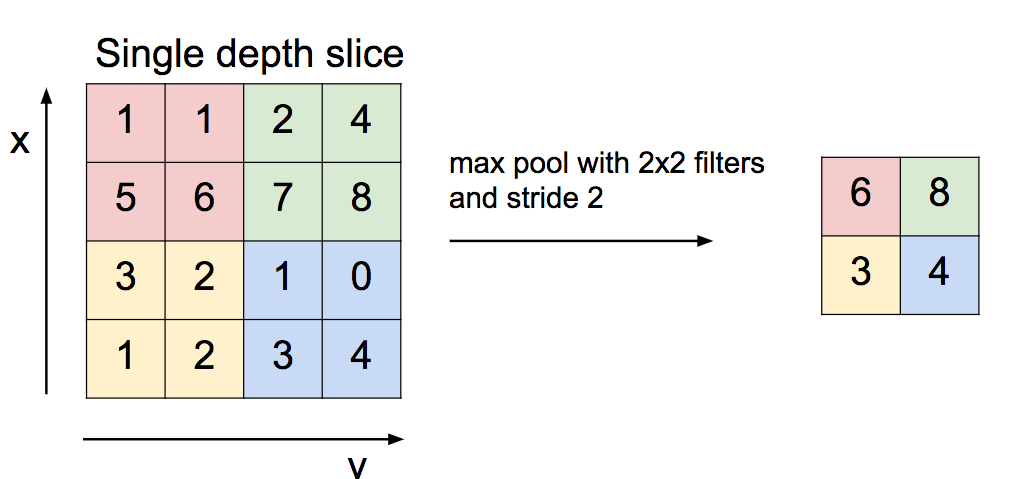
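A worked version of the exercise follows, assuming one bias per filter (the usual setup). Each filter spans the full input depth, so it has $5 \cdot 5 \cdot 3 = 75$ weights. The 20 filters therefore give 1500 weights, or 1520 parameters once the 20 biases are included. Padding and stride change the output size but not the number of parameters. The helper name `conv_params` is hypothetical.

```python
def conv_params(k: int, c_in: int, n_filters: int, bias: bool = True) -> int:
    # Each filter has k * k * c_in weights (+1 bias); P and S play no role
    per_filter = k * k * c_in + (1 if bias else 0)
    return per_filter * n_filters

print(conv_params(5, 3, 20, bias=False))  # 1500 weights
print(conv_params(5, 3, 20))              # 1520 parameters including biases

# Output volume for input 32 x 32 x 3, K = 5, P = 1, S = 2 (floor convention)
n_out = (32 + 2 * 1 - 5) // 2 + 1
print((n_out, n_out, 20))                 # (15, 15, 20)
```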

Pooling layers

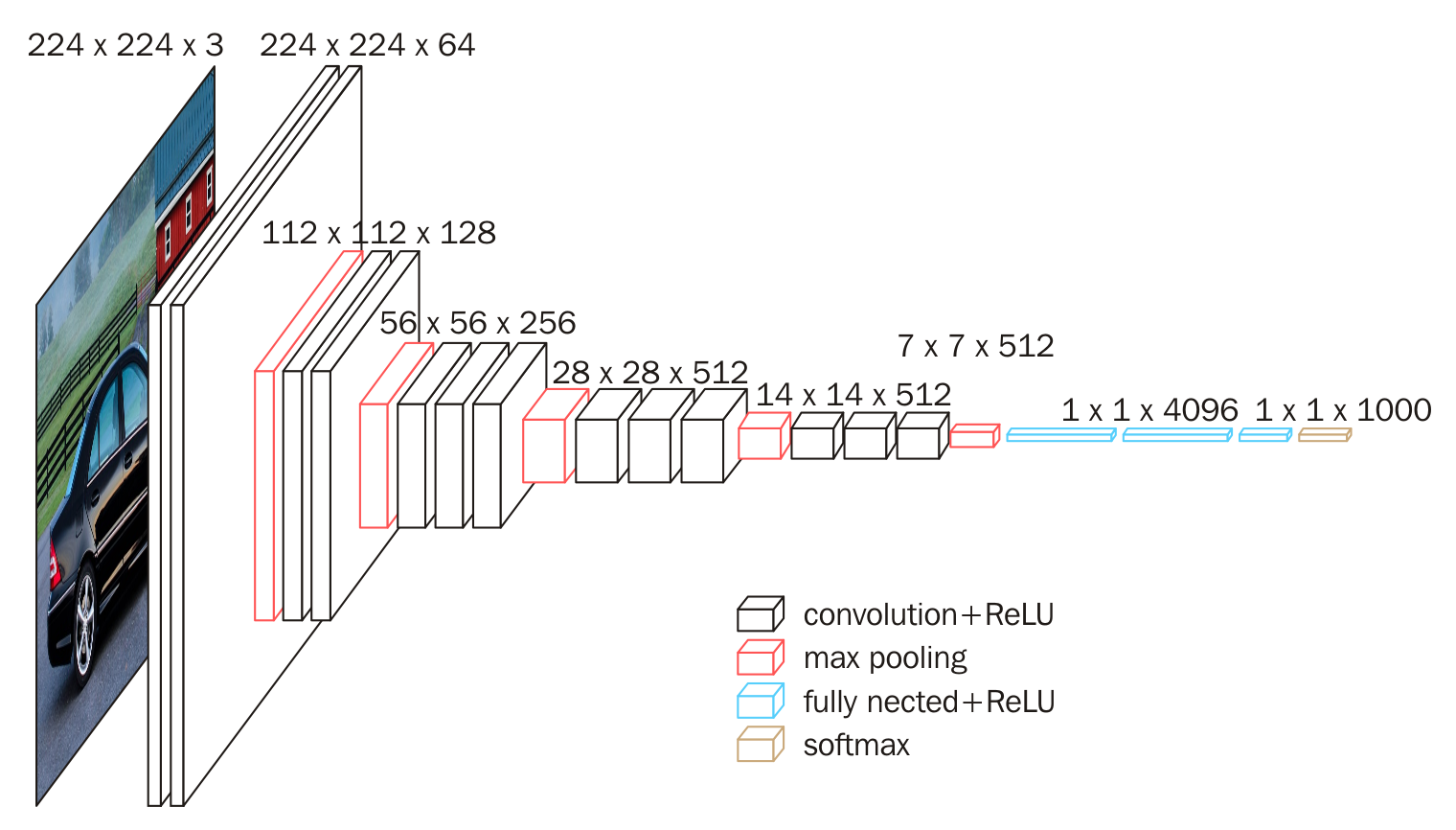
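A minimal sketch of pooling, assuming max pooling (the most common variant) with a 2 x 2 window and stride 2, on a hypothetical single-channel input. Pooling has no learnable parameters. Its output size follows the same formula as a convolution with $P = 0$.

```python
import numpy as np

def max_pool2d(x, size=2, stride=2):
    """Max pooling over a 2-D array (single channel, no padding)."""
    H, W = x.shape
    h_out = (H - size) // stride + 1
    w_out = (W - size) // stride + 1
    out = np.empty((h_out, w_out), dtype=x.dtype)
    for i in range(h_out):
        for j in range(w_out):
            window = x[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = window.max()  # keep the strongest response
    return out

x = np.array([[1, 3, 2, 4],
              [5, 6, 1, 0],
              [7, 2, 9, 8],
              [0, 1, 3, 4]])
print(max_pool2d(x))
# [[6 4]
#  [7 9]]
```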

VGG 16

Other things to know

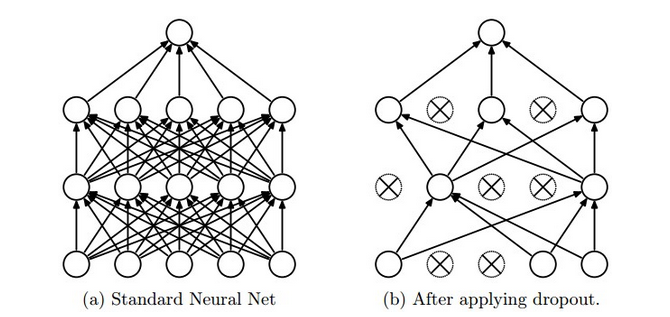

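Dropout technique

- We already have L1 and L2 regularization of the weights

- Dropout can complement them

- Training: each neuron is dropped (set to zero) with probability $p$, which can be interpreted as sampling a sub-network within the full neural network

- Testing: not applied; all neurons are used, with activations scaled so that their expected values match those seen during training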

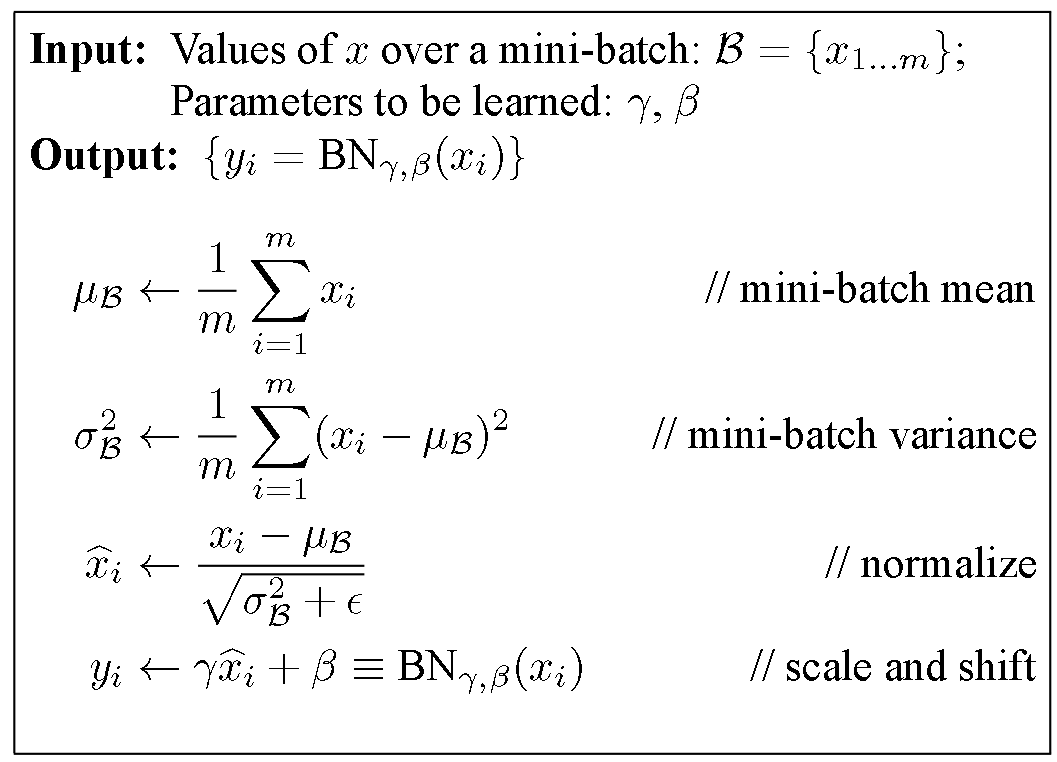
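A minimal sketch, assuming NumPy and the common "inverted dropout" variant. During training, the kept activations are multiplied by $1/(1-p)$, so the layer is simply the identity at test time. The function signature is hypothetical.

```python
import numpy as np

def dropout(x, p, training, rng):
    """Inverted dropout: zero each unit with probability p during training
    and rescale survivors by 1/(1-p); identity at test time."""
    if not training or p == 0.0:
        return x
    keep = rng.random(x.shape) >= p  # mask = one sampled sub-network
    return x * keep / (1.0 - p)

rng = np.random.default_rng(0)
x = np.ones((1000, 100))
y_train = dropout(x, p=0.5, training=True, rng=rng)
y_test = dropout(x, p=0.5, training=False, rng=rng)

# Mean activation stays close to 1 in training; test output equals the input
print(y_train.mean(), np.array_equal(y_test, x))
```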

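Batch Normalization

- Helps against bad weight initialization

- Helps against vanishing gradients

- Idea: normalize activations over each mini-batch right before the non-linearities, then apply a learnable scale and shift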

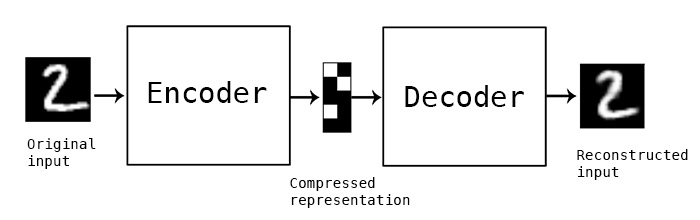

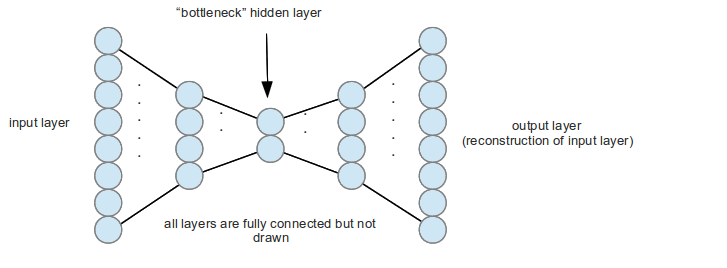
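Here is a sketch of the training-mode computation, assuming NumPy, a fully connected layer with inputs of shape (batch, features), and ReLU as the following non-linearity. The data is hypothetical. At inference time, frameworks replace the batch statistics with running averages, which is not shown here.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Batch normalization (training mode) for x of shape (batch, features)."""
    mu = x.mean(axis=0)                    # per-feature mean over the mini-batch
    var = x.var(axis=0)                    # per-feature variance over the mini-batch
    x_hat = (x - mu) / np.sqrt(var + eps)  # roughly zero mean, unit variance
    return gamma * x_hat + beta            # learnable scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))  # badly scaled pre-activations
gamma, beta = np.ones(4), np.zeros(4)

z = batch_norm_train(x, gamma, beta)
a = np.maximum(z, 0.0)  # the non-linearity (assumed ReLU) comes right after

print(np.round(z.mean(axis=0), 6), np.round(z.std(axis=0), 3))
```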

Autoencoders

- Autoencoders try to reconstruct the input signal from a compressed representation

- so they have to keep only the most valuable information!

- This is a form of dimensionality reduction!
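A deliberately tiny sketch of the idea, assuming NumPy. It is a *linear* autoencoder with a 2-unit bottleneck, trained by plain gradient descent on the mean squared reconstruction error. The data is hypothetical: 5-D points that actually lie on a 2-D subspace, so a 2-number code can reconstruct them almost perfectly. Practical autoencoders use non-linear (often convolutional) encoders and decoders. The learning rate and step count are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 200 points in 5-D that live on a 2-D subspace
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing

d_in, d_code = 5, 2
W_enc = rng.normal(scale=0.1, size=(d_in, d_code))  # encoder: 5 -> 2
W_dec = rng.normal(scale=0.1, size=(d_code, d_in))  # decoder: 2 -> 5

def reconstruction_error(X, W_enc, W_dec):
    return np.mean((X @ W_enc @ W_dec - X) ** 2)

lr = 0.02
initial = reconstruction_error(X, W_enc, W_dec)
for step in range(10000):
    code = X @ W_enc      # compressed representation
    X_rec = code @ W_dec  # reconstruction
    err = X_rec - X
    # Gradients of mean((X_rec - X)^2) w.r.t. decoder and encoder weights
    grad_dec = code.T @ err * (2 / err.size)
    grad_enc = X.T @ (err @ W_dec.T) * (2 / err.size)
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

final = reconstruction_error(X, W_enc, W_dec)
code = X @ W_enc  # each 5-D point is now summarized by 2 numbers
print(initial, final, code.shape)
```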

Autoencoders